How do multiple parallelism techniques compose together for large-scale pretraining? A walkthrough of hybrid parallelism.

Deep Learning, Training Framework, Data Parallelism, Pipeline Parallelism, Tensor Parallelism, Hybrid Parallelism

Many illustrations and explanations of hybrid parallelism show everything in one graph, yet it can be challenging to understand why all these parallelism strategies align in a particular way. In this blog, I aim to bridge that gap by walking through each concept from scratch, based on ideas discussed in my earlier posts. I assume you are already familiar with standard parallelism techniques, including Data Parallelism (DP), Tensor Parallelism (TP), Pipeline Parallelism (PP), and Sequence/Context Parallelism (SP/CP).

---

### **1. DP**

Let’s begin with Data Parallelism (DP). Each “DP unit” corresponds to one complete replication of the model.

- For small to medium-sized models (up to a few billion parameters), you may not need a multi-node setup to hold a model replica: each GPU can simply store one full copy.

- Advanced DP implementations like ZeRO (DP-Zero) or Fully Sharded Data Parallel (FSDP) help further reduce memory usage in single-node settings, but to keep things straightforward, let’s assume each DP unit holds a full model replica without sharding for the rest of this blog unless noted otherwise.

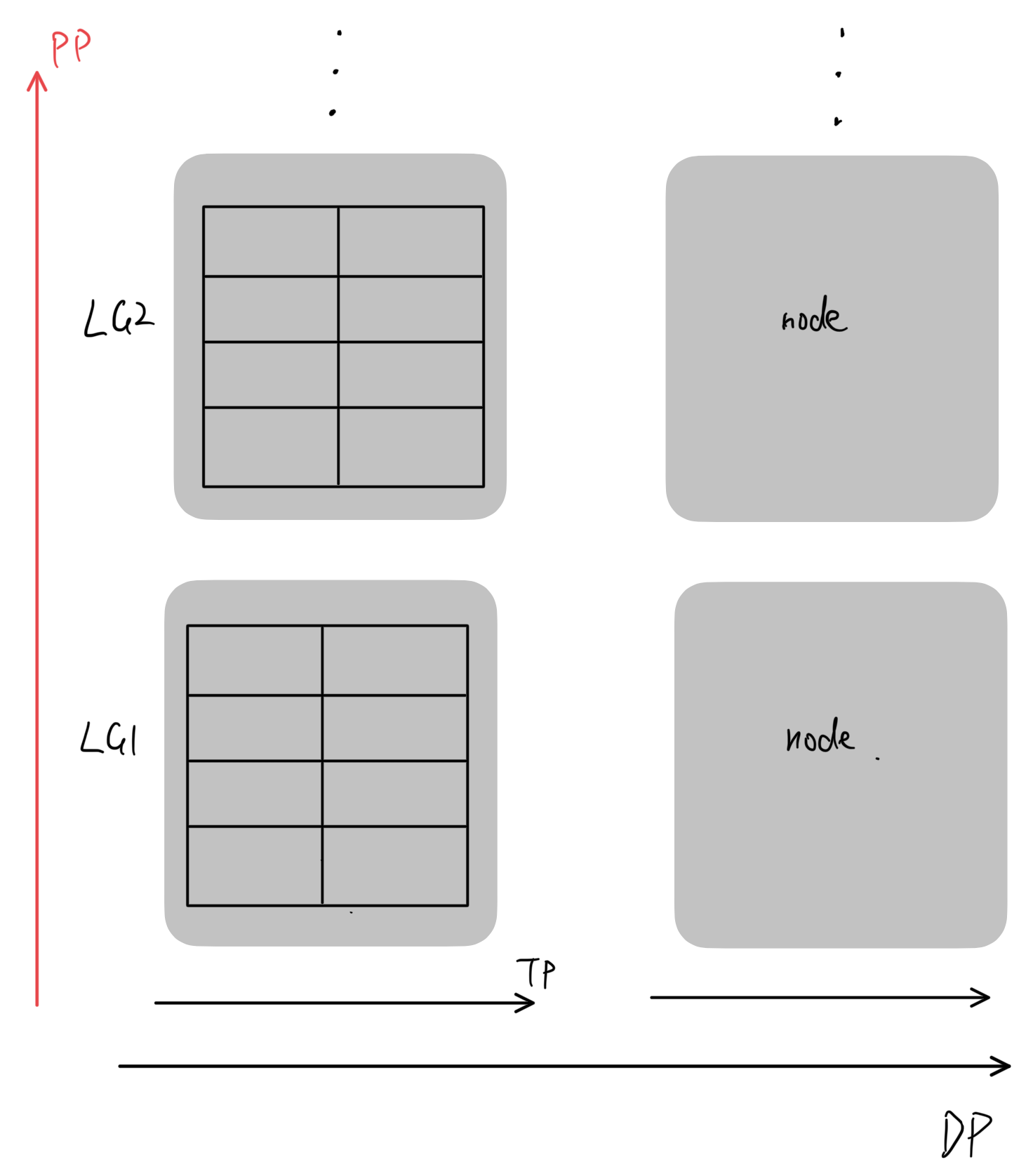

For `DP=2` on two nodes (one model replica per node, with 8 GPUs per node as in a typical data center), I illustrate it as follows:

> *Remember*: “DP” can also represent FSDP/DP-Zero. For simplicity, we stick to the idea of full model replication in each DP unit throughout this discussion unless noted otherwise.
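
To make the "one replica per DP unit" idea concrete, here is a minimal pure-Python sketch, assuming a toy scalar model `y = w * x` with a mean-squared-error loss. The function names are hypothetical and the final averaging step only stands in for the all-reduce that a real DP implementation performs. It shows that averaging per-replica gradients over equal-sized shards reproduces the full-batch gradient.

```python
import math

def grad_mse(w, xs, ys):
    """Gradient of mean((w*x - y)^2) with respect to the scalar weight w."""
    return sum(2.0 * (w * x - y) * x for x, y in zip(xs, ys)) / len(xs)

def data_parallel_grad(w, xs, ys, dp):
    """Split the batch into `dp` equal shards, compute one gradient per replica,
    then average them (the role an all-reduce plays in real DP)."""
    assert len(xs) % dp == 0, "sketch assumes the batch divides evenly"
    shard = len(xs) // dp
    local_grads = [
        grad_mse(w, xs[r * shard:(r + 1) * shard], ys[r * shard:(r + 1) * shard])
        for r in range(dp)
    ]
    return sum(local_grads) / dp

xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.0, 4.0, 6.0, 8.0]
full = grad_mse(0.5, xs, ys)                      # gradient on the whole batch
sharded = data_parallel_grad(0.5, xs, ys, dp=2)   # two replicas, two samples each
print(math.isclose(full, sharded))
```

Every replica holds the same `w`, so after the averaged gradient is applied all replicas stay in sync.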

---

### **2. DP + TP (Intra-Layer Parallelism)**

In practice, much "medium-scale" training uses DP + TP, with each node holding one model replica. TP is the most communication-intensive of these techniques, so it is kept within a node, where fast inter-GPU links keep the partitioned matrix multiplications efficient.

This approach involves:

- Each node storing a full copy of the model (conceptually, though advanced methods may shard some elements across nodes).

- Within each node, the model’s parameters are partitioned row-wise or column-wise within each layer (intra-layer) for parallel execution. These partitions communicate intensively during operations like matrix multiplications or attention blocks.

This is particularly useful for “medium-scale training,” where the model can fit into the total GPU memory of a single node, but still needs TP for efficient matrix operations. At this stage, to speed up training, you typically increase the DP size (i.e., add more nodes) while keeping TP fixed within each node.

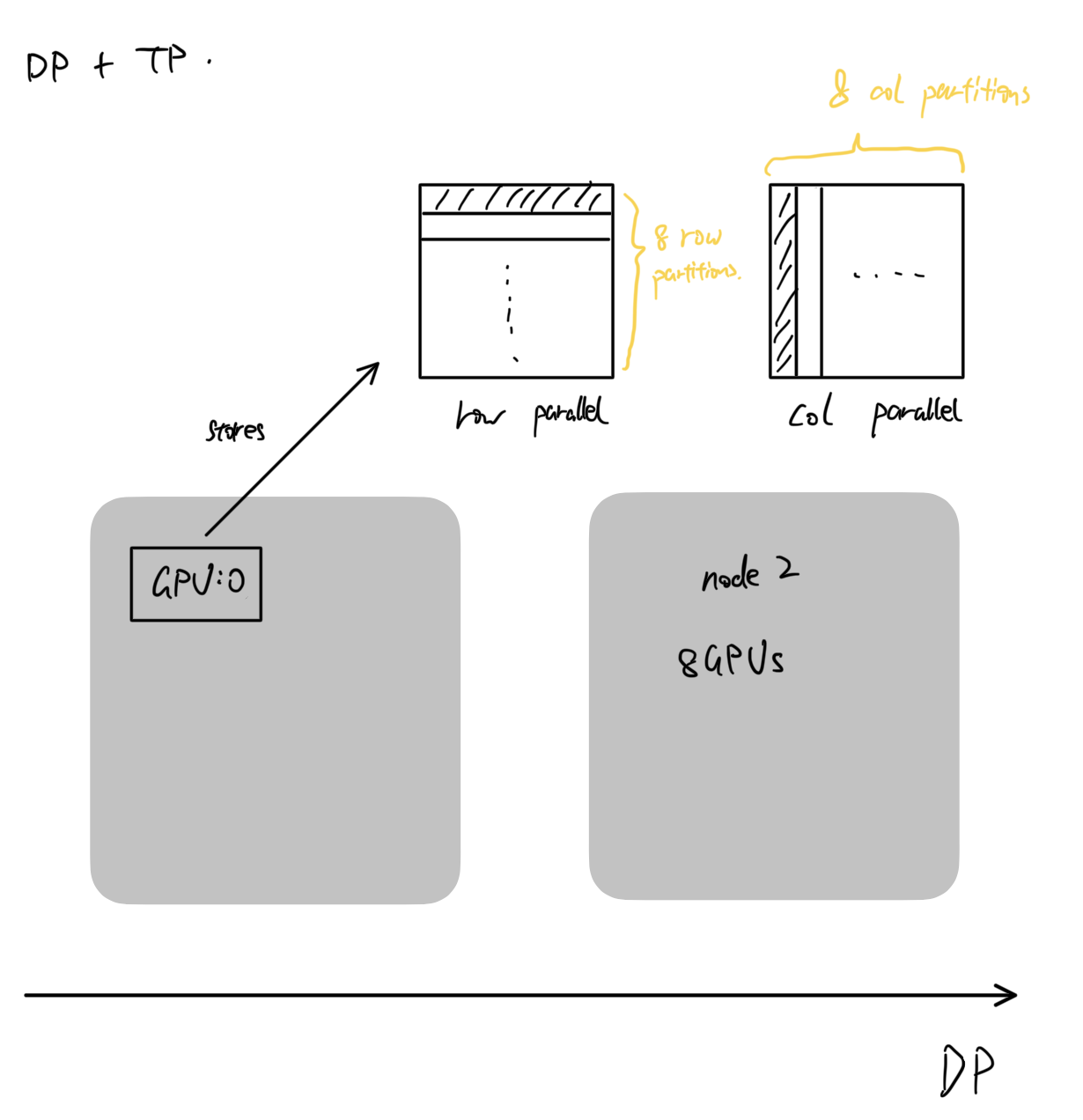

Below is a logical view of “medium-scale training on two nodes,” using `TP=8`:

It also includes an example analysis of what one GPU stores in this case. `GPU:0` stores a 1/8 partition of the model parameters; whether a given partition is row-wise or column-wise depends on the TP operation.
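
The difference between column-wise and row-wise partitions can be sketched in plain Python. This is an illustration under simplifying assumptions, not how any particular framework implements TP: the helper names are hypothetical, the matrices are tiny integer lists, and the concatenation and summation steps stand in for the all-gather and all-reduce collectives.

```python
def matmul(a, b):
    """Plain-Python product of a (m x k) and b (k x n)."""
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]

def split_columns(m, tp):
    """Column-wise partition: rank r keeps a contiguous slice of columns."""
    n = len(m[0]) // tp
    return [[row[r * n:(r + 1) * n] for row in m] for r in range(tp)]

def split_rows(m, tp):
    """Row-wise partition: rank r keeps a contiguous slice of rows."""
    n = len(m) // tp
    return [m[r * n:(r + 1) * n] for r in range(tp)]

def column_parallel(x, w, tp):
    """Each rank computes x @ W_r; results are concatenated (stand-in for all-gather)."""
    parts = [matmul(x, w_r) for w_r in split_columns(w, tp)]
    return [sum((p[i] for p in parts), []) for i in range(len(x))]

def row_parallel(x, w, tp):
    """Each rank computes x_r @ W_r on its slice of input features;
    partial results are summed (stand-in for all-reduce)."""
    parts = [matmul(x_r, w_r)
             for x_r, w_r in zip(split_columns(x, tp), split_rows(w, tp))]
    return [[sum(p[i][j] for p in parts) for j in range(len(w[0]))]
            for i in range(len(x))]

x = [[1, 2, 3, 4], [5, 6, 7, 8]]
w = [[1, 0, 2, 1], [0, 1, 1, 2], [3, 1, 0, 0], [1, 1, 1, 1]]
reference = matmul(x, w)
print(column_parallel(x, w, tp=2) == reference, row_parallel(x, w, tp=2) == reference)
```

In both cases each rank stores only `1/tp` of `w`, which is the fraction `GPU:0` holds in the analysis above.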

#### "medium-scale" training

One might ask how to estimate the number of parameters suitable for medium-scale training. Some simple math, using what we know from the memory estimation blog, does the job.

$$

\text{model params. count}=\frac{\text{one node's memory in bytes}}{\text{ weight-sized tensors in bytes}} = \frac{40\text{GB} \times 8 \times 10^9 \text{bytes/GB}}{4 \times \text{param. precision}} = \frac{80 \text{ billion bytes}}{\text{param. precision}}

$$

* `4` comes from weight-sized tensors `weight`, `gradient`, `momentum` and `variance` in a common training setup with `Adam` optimizer.

* `param. precision` here is the number of bytes per parameter. For full precision like `FP32`, it is 4 bytes per parameter. For half precision like `fp16` or `bf16`, it's 2 bytes per parameter.

Based on the formula above, the parameter count ranges roughly from 20B (FP32, 4 bytes per parameter) to 40B (fp16/bf16, 2 bytes per parameter). On the one hand, this ignores activation memory, so the model that actually fits is somewhat smaller. On the other hand, a large DP size with model sharding allows us to train larger models:

$$

\text{model params. count} \approx DP \times \frac{80 \text{ billion bytes} }{\text{param. precision}}

$$

For full-precision, fully sharded training on 4 nodes, a rough computation gives

$$

\text{model params. count} \approx 4 \times \frac{80 \text{ billion bytes} }{4 \text{ bytes per param.}} = 80 \text{ billion}

$$

Again, this is a very rough computation that ignores activation memory and sharding stages, but it gives a sense of what "medium-scale" training means.
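
The same arithmetic can be written as a small helper. This is a sketch that follows the formulas above literally (40 GB GPUs, 8 GPUs per node, four weight-sized tensors all stored at the same precision, no activation memory); the function and parameter names are my own.

```python
def max_params(gpu_mem_gb=40, gpus_per_node=8, bytes_per_param=4,
               weight_sized_tensors=4, dp_shards=1):
    """Rough upper bound on the parameter count that fits in memory.

    weight_sized_tensors: weight, gradient, momentum, variance (Adam).
    dp_shards: number of nodes the model states are fully sharded across
               (1 means every node holds a full replica).
    Activation memory is ignored, as in the text.
    """
    node_bytes = gpu_mem_gb * 10**9 * gpus_per_node
    return dp_shards * node_bytes // (weight_sized_tensors * bytes_per_param)

print(max_params(bytes_per_param=4))               # FP32, one node
print(max_params(bytes_per_param=2))               # fp16/bf16, one node
print(max_params(bytes_per_param=4, dp_shards=4))  # FP32, fully sharded over 4 nodes
```

The three calls reproduce the 20B, 40B, and 80B figures derived above.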

When model sizes grow to tens or hundreds of billions of parameters, however, even TP within one node is not enough. That’s where **Pipeline Parallelism (PP)** comes into play.

---

### **3. DP + TP + PP (Inter-Layer Parallelism)**

Pipeline Parallelism (PP) partitions different segments (groups of layers) across multiple GPUs or nodes. During forward passes, activations are passed from one pipeline stage to the next, rather than storing the entire model’s parameters on a single GPU.

- PP requires relatively light communication because it only transfers partial activations for each micro-batch between stages.

- Consequently, PP is well-suited for cross-node scaling, where inter-node bandwidth is often more limited compared to intra-node bandwidth.

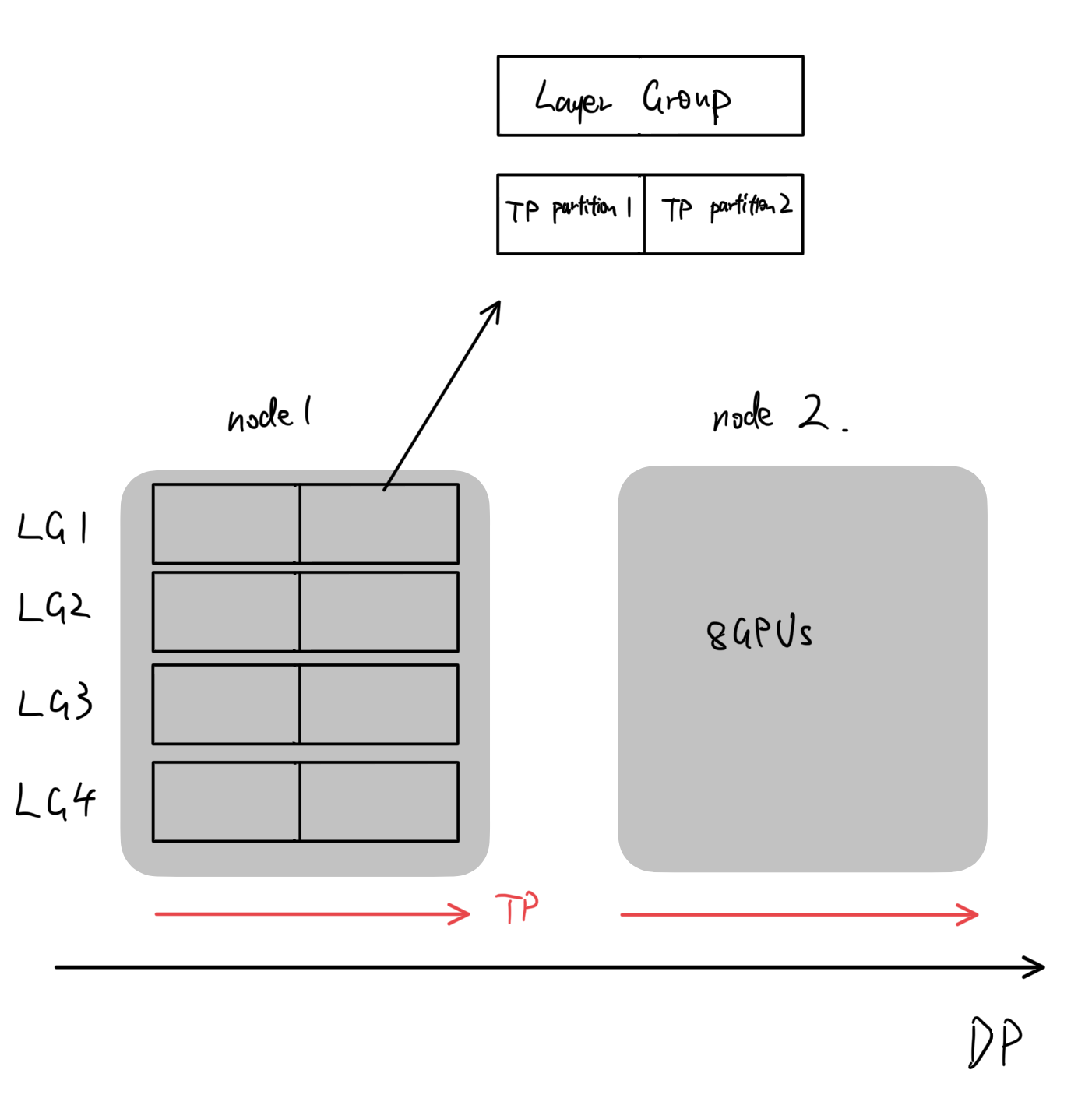

Conceptually, MP (Model Parallelism) = TP + PP. Each MP unit contains one model replica, and we are interested in how to partition each replica inter-layer (PP) and intra-layer (TP).

**3D Parallelism**: Combining DP, TP, and PP.

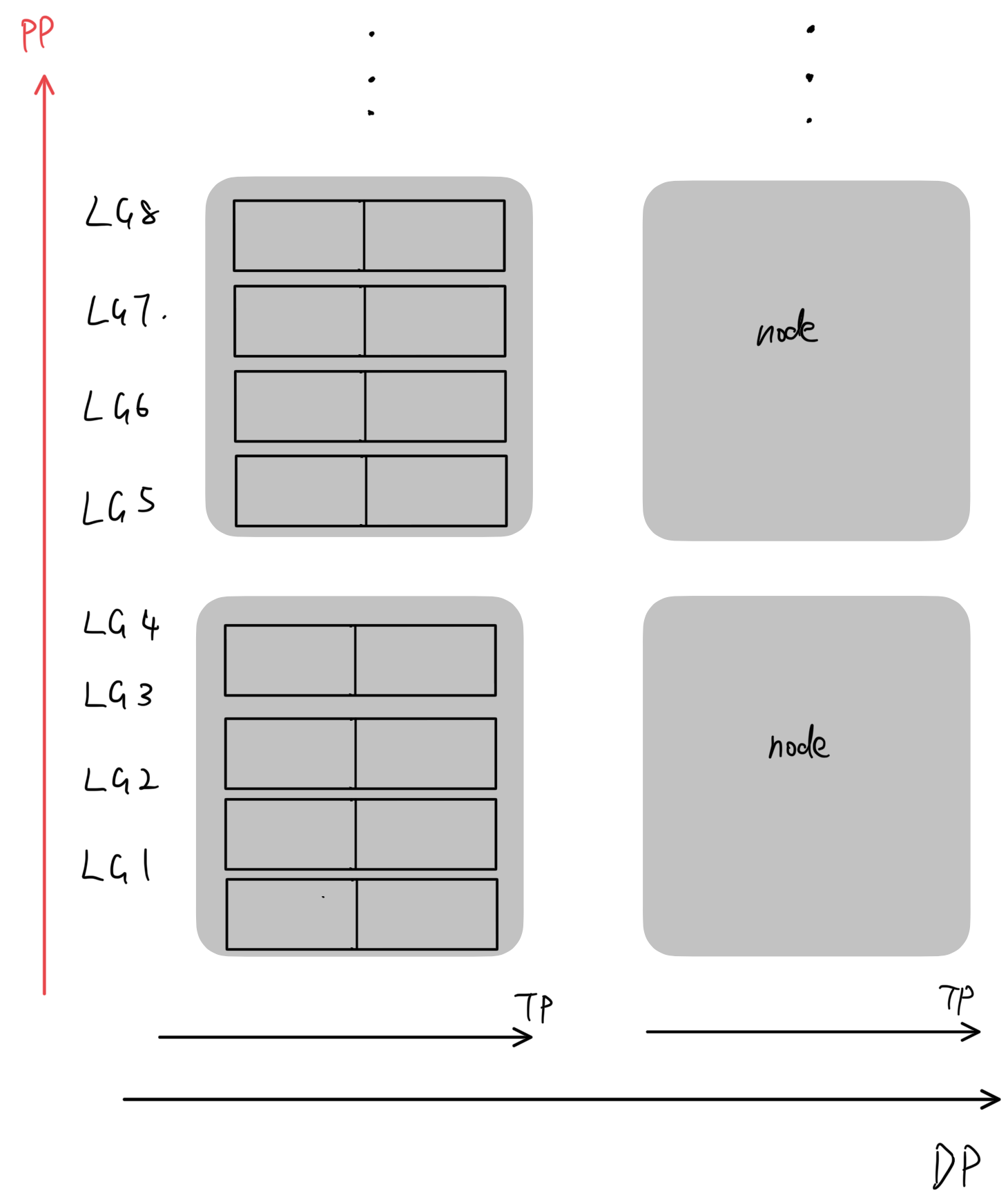

1. **Logical View I** (Same MP scale as TP-only)

- Each node still stores the same fraction of the model as in the “DP + TP” case, but we now add pipeline stages. For instance, if the model is split into four layer groups for PP, each group is further divided among two TP partitions.

2. **Logical View II** (Scaling PP)

- PP can be scaled with additional nodes to cover more layer groups.

- Each pipeline stage holds fewer layers, reducing the memory burden per GPU.

3. **Logical View III** (Scaling PP + Larger TP)

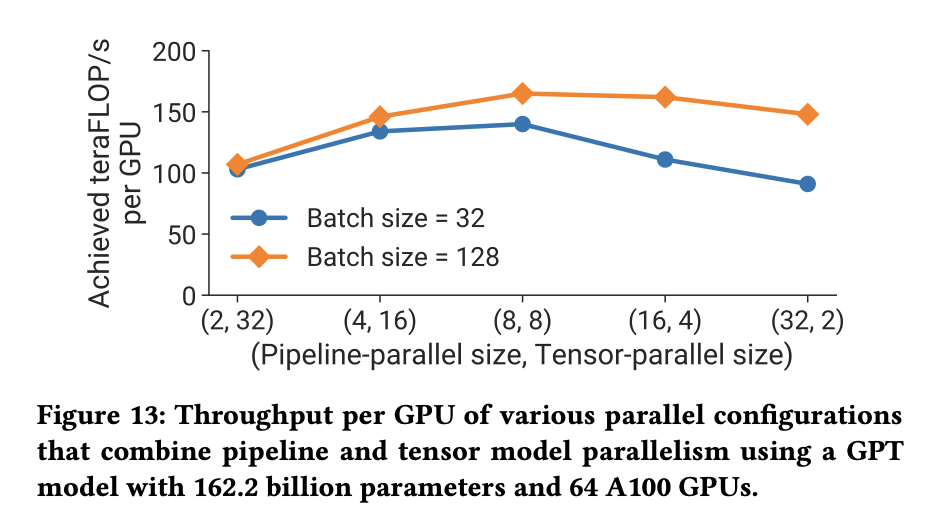

- When pretraining very large models, you want to achieve an optimal combination of PP and TP to maximize throughput.

- Research has shown that, for a given total MP (e.g., 64-way model parallelism), `TP=8` yields the best training efficiency in terms of FLOPs utilization.

By combining these three techniques, you can effectively scale models to hundreds of billions of parameters.
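
One way to picture the 3D layout is as a mapping from a global GPU rank to a `(dp, pp, tp)` coordinate. The sketch below assumes a layout in which TP varies fastest, then PP, then DP, so that consecutive ranks (GPUs on the same node) share a TP group. Real frameworks differ in how they order these dimensions, and the names here are hypothetical.

```python
from collections import namedtuple

Coord = namedtuple("Coord", ["dp", "pp", "tp"])

def dp_size(world_size, tp, pp):
    """DP is whatever is left after one replica takes tp * pp GPUs."""
    assert world_size % (tp * pp) == 0, "world size must be a multiple of tp * pp"
    return world_size // (tp * pp)

def rank_to_coord(rank, world_size, tp, pp):
    """Map a global rank to (dp, pp, tp) indices.
    Assumed order: TP fastest, then PP, then DP."""
    assert 0 <= rank < world_size
    dp_size(world_size, tp, pp)  # validates divisibility
    return Coord(dp=rank // (tp * pp), pp=(rank // tp) % pp, tp=rank % tp)

def tp_group(rank, tp):
    """Ranks in the same TP group; contiguous under the assumed layout."""
    start = (rank // tp) * tp
    return list(range(start, start + tp))

# 64 GPUs (8 nodes x 8 GPUs) with TP=8 and PP=4 leaves DP=2.
print(dp_size(64, tp=8, pp=4))
print(rank_to_coord(9, 64, tp=8, pp=4))
print(tp_group(9, tp=8))
```

With `TP=8` and 8 GPUs per node, every TP group lands on exactly one node, while pipeline stages and DP replicas span nodes.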

---

### **4. DP + TP + PP + SP/CP**

As model dimensions (hidden size $h$) and sequence lengths ($s$) increase, activation memory can also become massive. Recall that activation memory scales roughly with $b \times s \times h$, where $b$ is the batch size. Large $s$ and $h$ lead to immense activation storage and communication demands.

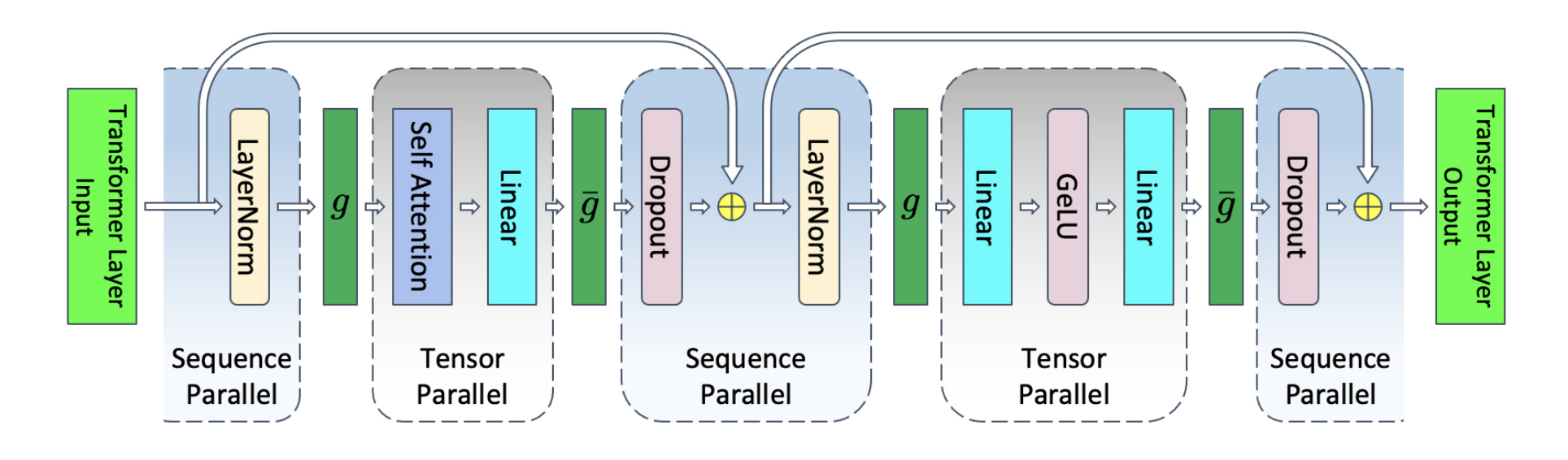

#### **Sequence Parallelism (SP1) by NVIDIA**

NVIDIA’s [Sequence Parallelism](https://arxiv.org/pdf/2205.05198) focuses on positions in the Transformer block where TP cannot partition activations, namely Dropout and LayerNorm. Since these operations are independent along the sequence dimension, SP can split their activations along that dimension across the TP devices, removing redundant copies. This cuts activation memory from $b \times s \times h$ to $\frac{b \times s \times h}{TP}$ in those layers.
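
As a quick worked example of that reduction, the sketch below computes the size of a single $b \times s \times h$ activation tensor with and without an 8-way split. The shapes (`b=1`, `s=8192`, `h=8192`) and the 2-byte element size are illustrative assumptions, not values from any specific model.

```python
def activation_bytes(b, s, h, bytes_per_elem=2, shard=1):
    """Bytes held per device for one b x s x h activation tensor
    that is split evenly across `shard` devices."""
    assert (b * s * h) % shard == 0, "sketch assumes an even split"
    return b * s * h * bytes_per_elem // shard

MIB = 2**20
replicated = activation_bytes(1, 8192, 8192)        # every TP rank keeps a full copy
sharded = activation_bytes(1, 8192, 8192, shard=8)  # split across TP=8 ranks
print(replicated // MIB, "MiB ->", sharded // MIB, "MiB per GPU")
```

A Transformer layer keeps several such tensors for the backward pass, so the saving compounds across layers.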

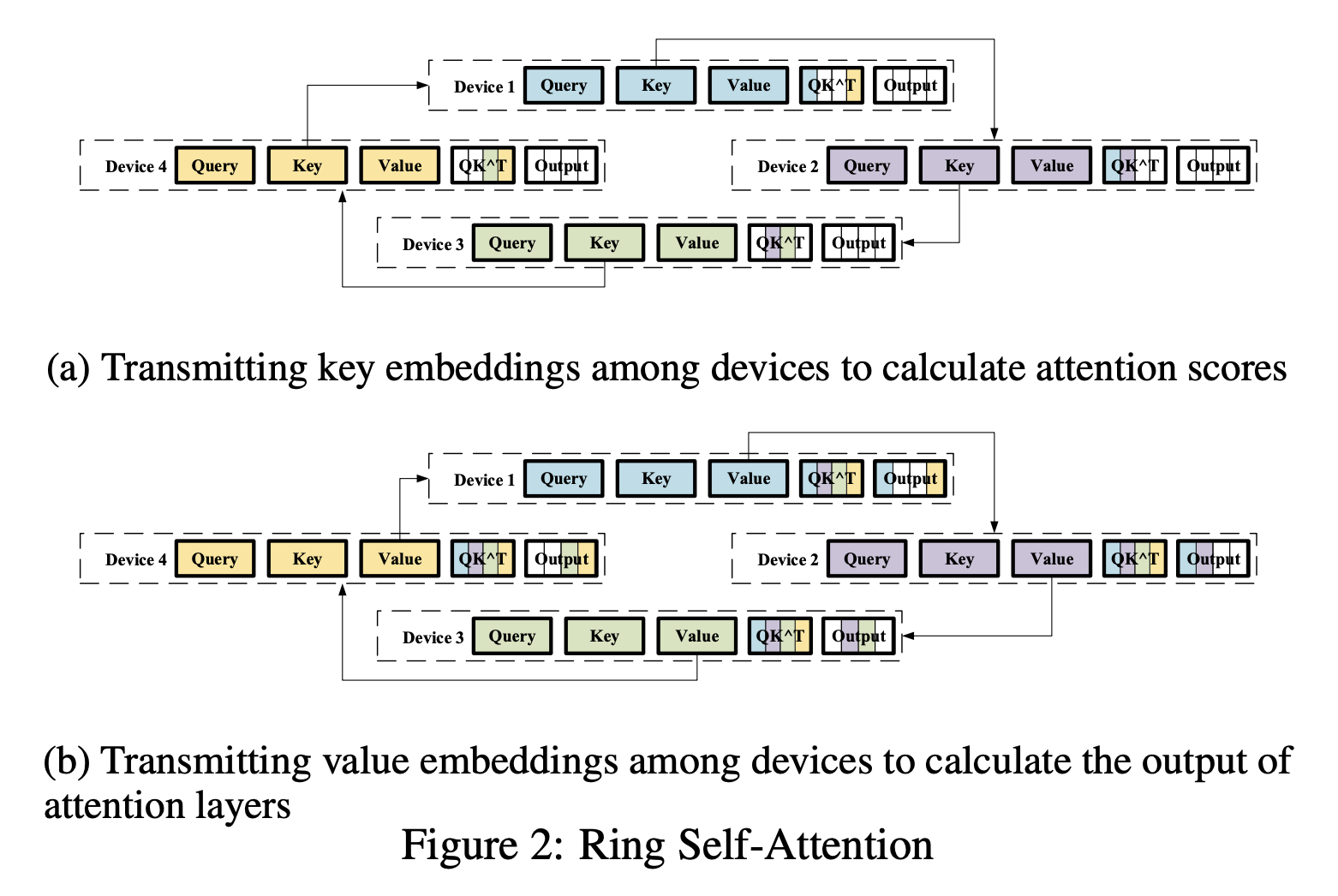

#### **Sequence Parallelism (SP2) by NUS (a.k.a. Context Parallelism)**

Another form of [Sequence Parallelism](https://arxiv.org/pdf/2105.13120) from NUS, later extended to [Context Parallelism](https://arxiv.org/pdf/2411.01783), also partitions activations along the sequence dimension. However, it involves more systematic communication (ring-attention) to distribute $Q, K, V$ blocks across GPUs. This approach ultimately partitions *all* activations (including the ones in self-attention and MLP layers) to $\frac{b \times s \times h}{CP}$, where $CP$ is the number of GPUs the sequence is split across.
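
The ring communication pattern can be sketched without any attention math. The code below only tracks which key/value chunk each rank holds at each step, assuming every rank starts with its own sequence chunk and forwards the chunk it currently holds to the next rank in the ring. The attention computation itself (and how partial results are combined) is deliberately left out, and the function names are hypothetical.

```python
def ring_schedule(n):
    """schedule[step][rank] = index of the K/V chunk held by `rank` at `step`.
    Rank r starts with chunk r and passes its current chunk to rank (r + 1) % n."""
    return [[(r - step) % n for r in range(n)] for step in range(n)]

def chunks_seen(n):
    """For each rank, the sorted list of K/V chunks it has held after n steps."""
    sched = ring_schedule(n)
    return [sorted(sched[step][r] for step in range(n)) for r in range(n)]

for step, holders in enumerate(ring_schedule(4)):
    print(f"step {step}: rank -> chunk {holders}")
print(chunks_seen(4))
```

After `n` steps every rank has seen every chunk, so each rank's local queries can attend to the full sequence while only `1/n` of the keys and values are resident at any time.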

The two versions, published in May 2021 (NUS) and May 2022 (NVIDIA), address the same overarching goal of reducing activation memory, but with different target activations and communication strategies.

---

### **Example Hybrid Parallelism Graphs**

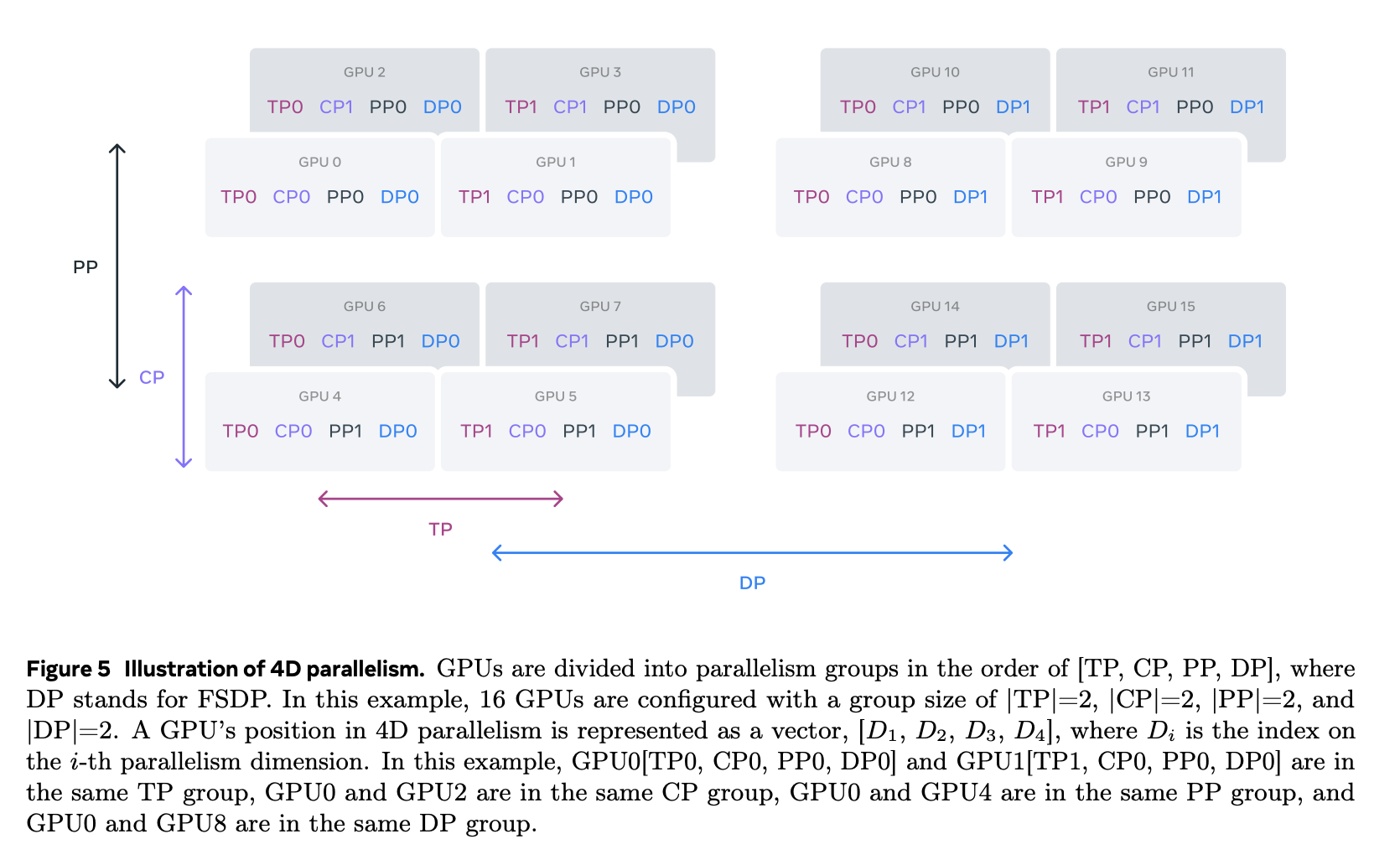

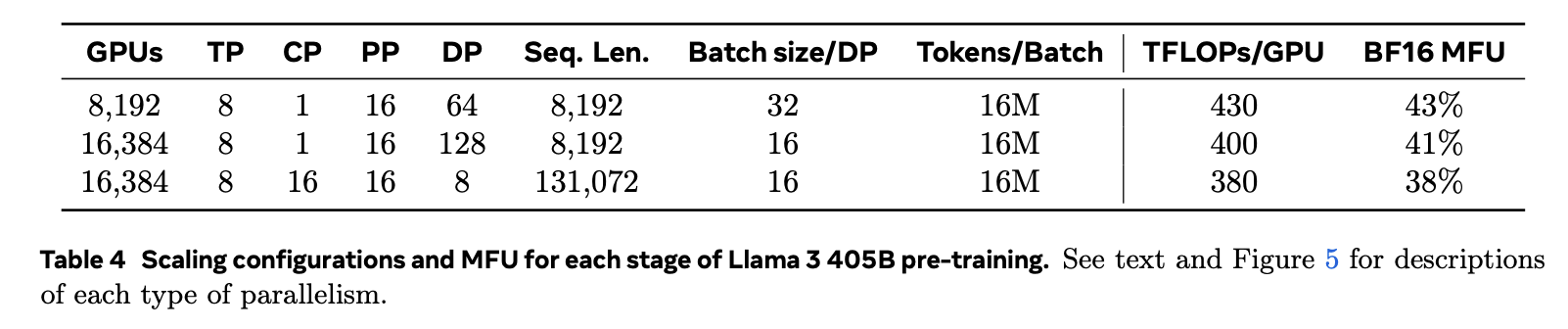

- **Llama 3**

Typically uses `TP=8` and scales PP to 16 for large models.

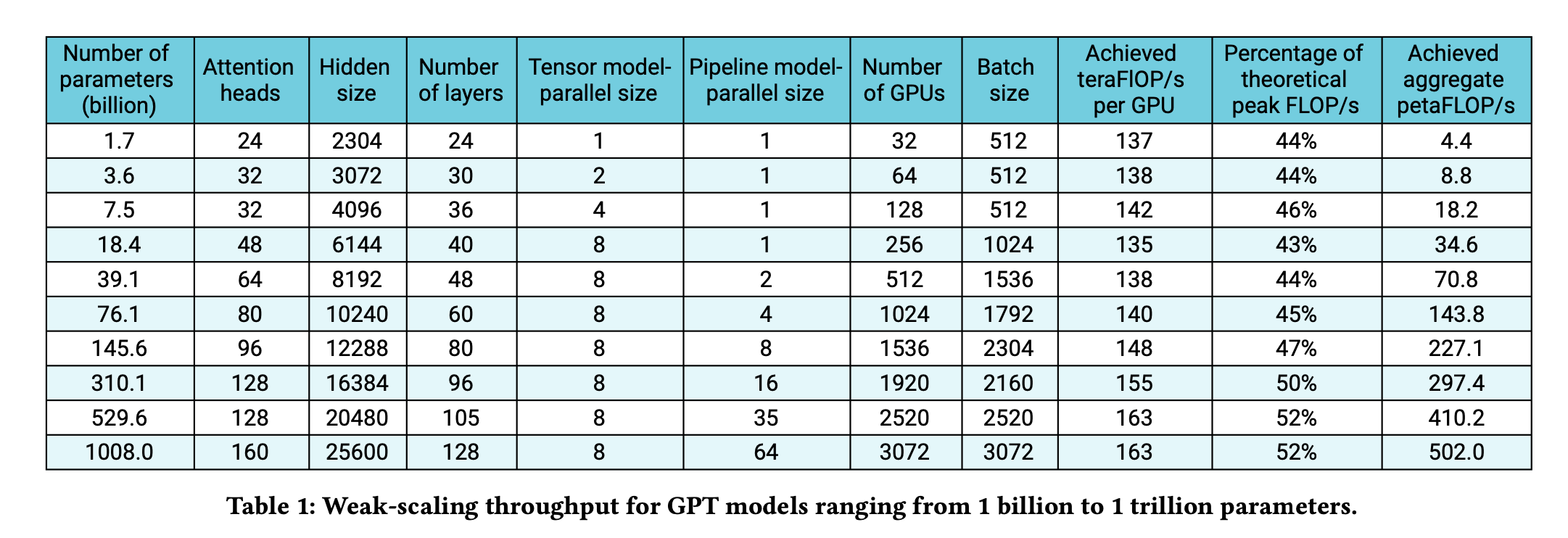

- **[GPT Scaling](https://arxiv.org/pdf/2104.04473) (Megatron-LM)**

Shows how TP scales from 1 to 8 while PP scales up to 64, covering a wide range of model sizes.

---

### **How to Decide Parallelism Sizes in 4D (DP, TP, PP, SP/CP)**

Instead of deciding DP size first, large-scale training frameworks typically choose parallelism sizes in a somewhat reversed order:

1. **Start with TP and PP** to ensure you can fit one replica of the model across available hardware.

- Always keep TP within a single node (common choices: TP=2, 4, or 8).

- Use PP across multiple GPUs or nodes for minimal communication overhead and maximum scalability.

2. **Incorporate SP/CP** if your sequence length $s$ becomes large and you need to reduce activation memory further.

3. **Finally, scale DP** for more data throughput and to utilize additional nodes, each of which holds a complete model replica (or a fully sharded version).

During pretraining with massive sequence lengths, SP/CP can provide significant memory savings by splitting or distributing activations. Meanwhile, you can combine these methods with other memory optimizations (e.g., ZeRO or gradient checkpointing) to further reduce resource usage.
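
The ordering above (TP, then PP, then DP) can be sketched as a tiny planner. This is a simplification under stated assumptions rather than how any framework actually chooses sizes: TP is fixed to the number of GPUs per node, only model-state memory is considered, the SP/CP step is skipped, and the function and parameter names are hypothetical.

```python
def plan_parallelism(world_size, gpus_per_node, gpu_mem_gb, model_state_gb):
    """Pick sizes in the order TP -> PP -> DP.

    model_state_gb: memory for one replica's weights, gradients, and optimizer
                    states (activations ignored).
    """
    tp = gpus_per_node                      # step 1a: keep TP inside one node
    assert world_size % tp == 0, "world size must be a multiple of the node size"
    tp_groups = world_size // tp
    for pp in range(1, tp_groups + 1):      # step 1b: smallest PP that fits a replica
        if tp_groups % pp == 0 and tp * pp * gpu_mem_gb >= model_state_gb:
            return {"tp": tp, "pp": pp, "dp": tp_groups // pp}  # step 3: rest is DP
    raise ValueError("one replica does not fit on the given hardware")

# 128 GPUs of 40 GB each, 8 per node, and a replica needing about 1000 GB of model state.
print(plan_parallelism(128, 8, 40, 1000))
```

In this example `pp=3` would give enough memory on paper but does not divide the 16 TP groups evenly, so the planner moves on to `pp=4` and leaves `dp=4`.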

---

### **Conclusion**

As models grow toward the trillion-parameter range and require increasingly longer context windows for inputs (text, video, or even multimodal data), advanced parallelism techniques become essential. By layering DP, TP, PP, and SP/CP, you can tackle the demands of massive model sizes and long-sequence processing. This blog highlights the key ideas behind hybrid parallelism, which will undoubtedly pave the way for the future of large-scale model training.

## Q&A

#### Q1: What study suggests `TP=8` gives the best training efficiency?

As the figure from the [MP paper](https://arxiv.org/pdf/2104.04473) suggests, given `MP=64`, `TP=8` provides the best training efficiency (measured by FLOPs).
