Accelerating Deep Learning: High-Speed Data Transfer

Memory, HBM, SRAM, GPU, GPU Cluster

### Industry Context

Recent industry movements highlight the importance of high-performance data transfer for large-scale distributed deep learning workloads. For instance, Nvidia’s continued investments (e.g., the [acquisition of Mellanox](http://nvidianews.nvidia.com/news/nvidia-to-acquire-mellanox-for-6-9-billion), a company specializing in high-speed inter-node communication) underscore how critical networking solutions have become. Meanwhile, [Broadcom’s stock surge](https://finance.yahoo.com/news/broadcoms-value-surged-324-billion-183816932.html#) and [Enfabrica’s substantial fundraising round](https://www.businesswire.com/news/home/20241119607725/en/Enfabrica-Raises-115M-in-New-Funding-to-Advance-its-Leadership-in-AI-Networking-Solutions) further reinforce the growing significance of robust network infrastructure and interconnect technologies in modern HPC (High-Performance Computing) and AI clusters.

### Communication Bottlenecks in Large-Scale Training

As discussed in [this data-parallelism blog post](https://hepengfei.ml/blog/data_parallelism), a tree-like communication approach often suffers from significant bottlenecks. When many GPUs or nodes communicate simultaneously in a hierarchy, peak load occurs on certain links, limiting the overall throughput. Ideally, we use more direct communication patterns, reduce communication volume, or overlap communication with computation to mitigate such bottlenecks.

### Data Transfer Rates and the Roofline Model

Data transfer rate (in bits/second or bytes/second) is as critical as raw compute throughput. In deep learning contexts, gradient exchange can be just as time-consuming as forward and backward passes. The Roofline Model helps identify whether the system is compute-bound or memory-bound:

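$$
\text{Attainable Performance} = \min\left(\pi,\ \beta \cdot I\right), \qquad I = \frac{\text{operations performed}}{\text{bytes moved}},
$$

where $\pi$ is the peak compute throughput (operations per second), $\beta$ is the memory bandwidth (bytes per second), and $I$ is the operational intensity of the workload. The ratio $\pi / \beta$ marks the ridge point: a workload with $I < \pi / \beta$ is memory-bound, and one above it is compute-bound. Modern GPUs often operate in a regime where memory or I/O speed is the constraining factor, especially as GPU compute units can outpace memory read/write (R/W) speeds.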
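To make the compute-bound versus memory-bound distinction concrete, here is a minimal sketch of the standard roofline bound, $\min(\pi, \beta \cdot I)$, where $I$ is operations per byte moved. The accelerator numbers (`PEAK_FLOPS`, `BANDWIDTH`) are hypothetical round figures chosen for easy arithmetic, not the specification of any real GPU.

```python
def ridge_point(peak_flops: float, bandwidth: float) -> float:
    """Operational intensity (FLOP/byte) at which compute and memory limits meet."""
    return peak_flops / bandwidth


def attainable_flops(peak_flops: float, bandwidth: float, intensity: float) -> float:
    """Roofline bound: the lower of the compute roof and the memory roof."""
    return min(peak_flops, bandwidth * intensity)


def is_memory_bound(peak_flops: float, bandwidth: float, intensity: float) -> bool:
    """A kernel is memory-bound when its intensity falls below the ridge point."""
    return intensity < ridge_point(peak_flops, bandwidth)


# Hypothetical accelerator (assumed values for illustration only).
PEAK_FLOPS = 100e12  # 100 TFLOP/s
BANDWIDTH = 1e12     # 1 TB/s

for intensity in (10, 100, 500):  # FLOP per byte moved
    bound = attainable_flops(PEAK_FLOPS, BANDWIDTH, intensity)
    label = "memory-bound" if is_memory_bound(PEAK_FLOPS, BANDWIDTH, intensity) else "compute-bound"
    print(f"I={intensity:>4} FLOP/B -> {bound / 1e12:.0f} TFLOP/s ({label})")
```

With these assumed numbers, any kernel performing fewer than 100 operations per byte moved cannot reach peak compute, no matter how well it is tuned.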

### Memory Hierarchy for Model Training

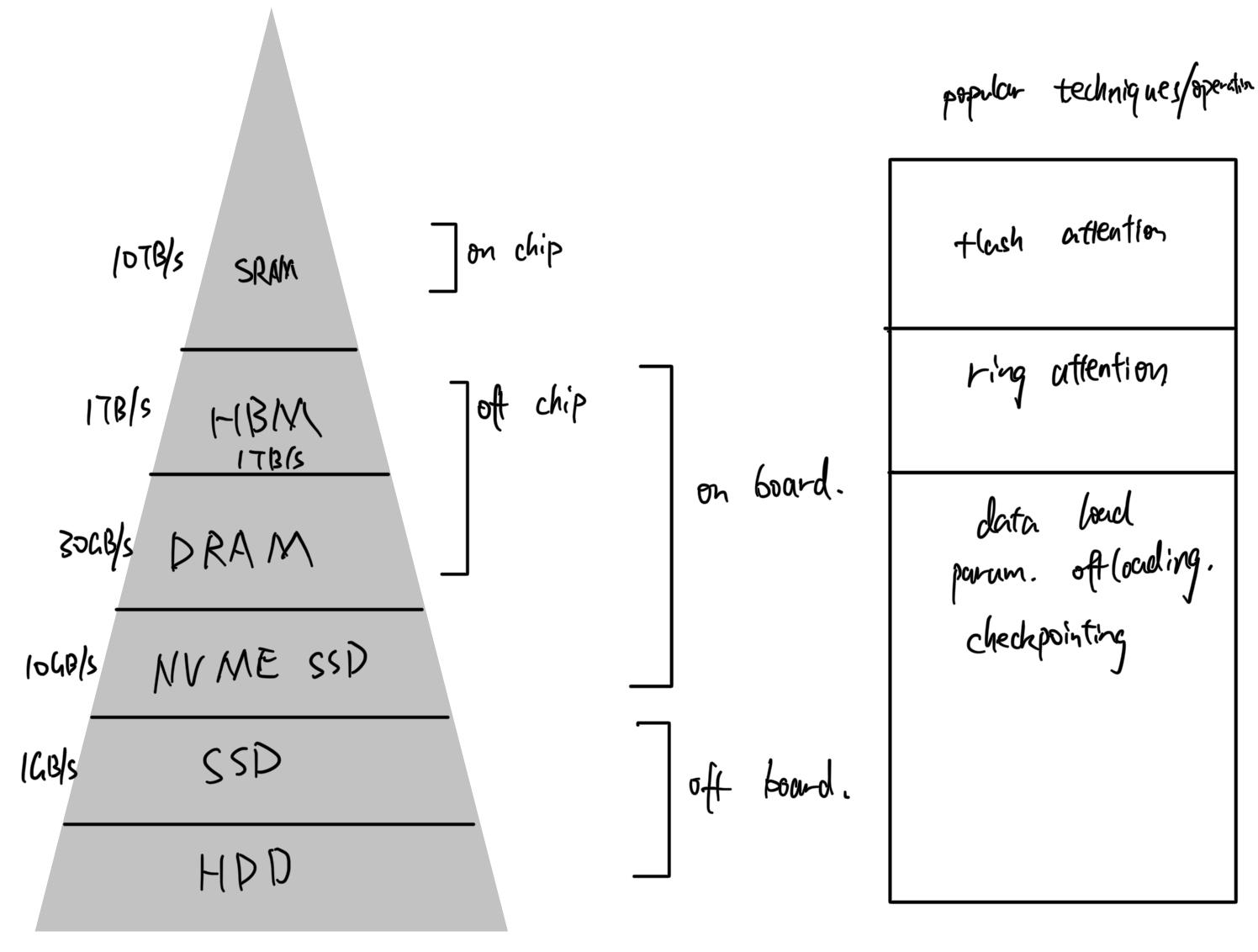

Model training touches almost every layer of the memory hierarchy:

- **GPU On-Chip (SRAM)**: Fastest, used for registers and shared memory.

- **GPU HBM**: High-bandwidth memory co-located on the GPU package.

- **CPU DRAM**: Main system memory for data staging.

- **NVMe SSD (on-board)**: Attached via PCIe lanes, faster than traditional SATA SSDs.

- **External SSD/HDD**: Usually over a cable or network; primarily for long-term storage.

The speeds shown on the left of the figure are rough estimates of typical R/W speeds for each memory tier. They are provided to give readers a sense of the orders of magnitude that separate the tiers.

Transferring data between these layers is bottlenecked by the slowest medium involved, and write speeds are often lower than read speeds.
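As a rough illustration of the slowest-medium rule, the sketch below estimates transfer time from the minimum bandwidth along a path. The tier names and bandwidth figures in `HYPOTHETICAL_PATH` are assumptions picked for easy arithmetic, not measurements, and the model ignores latency and protocol overhead.

```python
def path_bandwidth(stage_bandwidths_gb_s):
    """Effective bandwidth of a multi-hop transfer: the slowest hop wins."""
    return min(stage_bandwidths_gb_s)


def transfer_seconds(size_gb, stage_bandwidths_gb_s):
    """Idealized transfer time, ignoring latency and protocol overhead."""
    return size_gb / path_bandwidth(stage_bandwidths_gb_s)


# Assumed bandwidths in GB/s for loading weights from SSD into GPU memory.
HYPOTHETICAL_PATH = {
    "nvme_ssd_read": 5,
    "pcie_link": 25,
    "hbm_write": 2000,
}

seconds = transfer_seconds(70, HYPOTHETICAL_PATH.values())
print(f"70 GB over this path takes about {seconds:.0f} s")
```

Under these assumptions, upgrading the PCIe link or the HBM would not shorten the transfer at all; only a faster SSD would.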

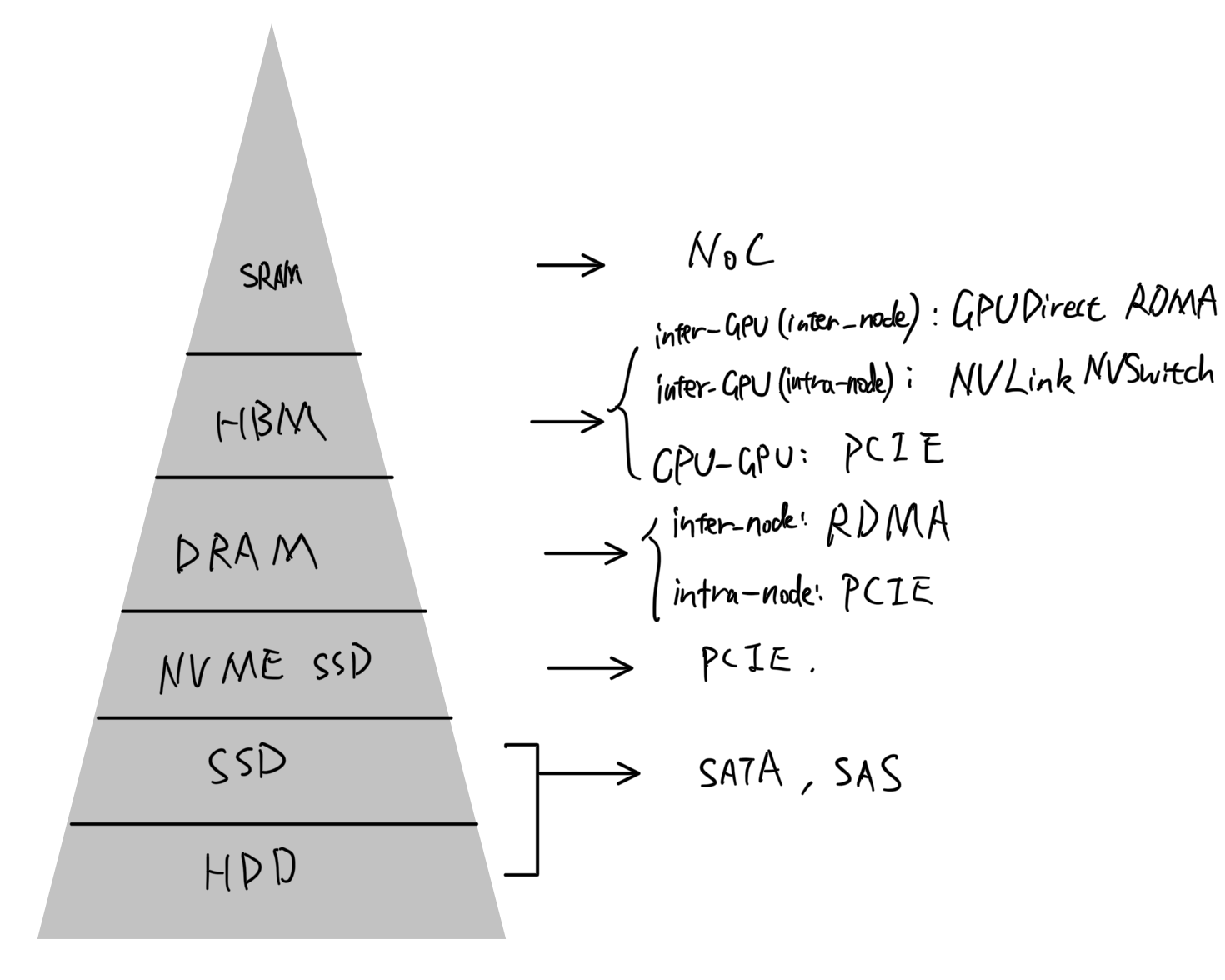

### Data Transmission for Memory Hierarchy

* **SRAM** uses the Network-on-Chip (NoC) for fast communication between SRAM and HBM.

* **HBM** relies on several different communication technologies:

  - In a cluster, it communicates with other nodes/GPUs through the NIC using GPUDirect RDMA, which lets the NIC read and write GPU memory directly, bypassing the CPU and host DRAM.

  - Within a node, it communicates with the other GPUs over NVLink or NVSwitch for model training. Personal workstations and desktops often use PCIe lanes or a two-GPU NVLink bridge, while data center nodes in large-scale systems use eight-GPU NVSwitch. NVLink bandwidth is several times higher than PCIe bandwidth, which helps keep synchronization from blocking training.

  - Within a node, it transfers data or loads/offloads parameters over PCIe between HBM and other memories such as DRAM and NVMe SSD.

#### Communication Types

##### Basic operations

- **Point-to-Point (P2P)**: Direct GPU-to-GPU or node-to-node communication. Its operations are usually denoted `send` and `recv`. For example, when device A passes activations to device B, device A performs a `send` and device B performs a `recv`.

- **One-to-all**: `broadcast`

- **All-to-one**: `reduce`, `gather`

- **Many-to-One**: `gather`

- **One-to-Many**: `scatter`
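The semantics of these basic operations can be sketched in plain Python by treating each rank as a list index. This is a single-process toy model for intuition only: the function names and the `make_mailboxes` helper are hypothetical and do not correspond to the API of NCCL, MPI, or `torch.distributed`.

```python
from functools import reduce as fold
from operator import add
from queue import Queue


def make_mailboxes(world_size):
    """One inbox per rank, standing in for a P2P channel."""
    return {rank: Queue() for rank in range(world_size)}


def send(mailboxes, dst, payload):
    """Point-to-point: the sender puts a payload in the destination's inbox."""
    mailboxes[dst].put(payload)


def recv(mailboxes, rank):
    """Point-to-point: the receiver takes the next payload from its inbox."""
    return mailboxes[rank].get()


def broadcast(value, world_size):
    """One-to-all: every rank ends up with the root's value."""
    return [value for _ in range(world_size)]


def reduce_to_root(per_rank, op=add):
    """All-to-one: combine one value per rank into a single result on the root."""
    return fold(op, per_rank)


def gather(per_rank):
    """Many-to-one: the root collects every rank's value in rank order."""
    return list(per_rank)


def scatter(chunks):
    """One-to-many: the root hands chunk i to rank i."""
    return {rank: chunk for rank, chunk in enumerate(chunks)}


boxes = make_mailboxes(2)
send(boxes, dst=1, payload=[0.5, 0.25])  # device A sends activations
activations = recv(boxes, rank=1)        # device B receives them
```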

##### Combined operations

- `reduce-scatter`, `all-gather`

- **All-to-All**: Built on multiple P2P links, so it can be more bandwidth-intensive. Common operations are:

  * `ring-all-reduce`: `ring-reduce-scatter` followed by `ring-all-gather`. It is used in DDP, and the ring schedule spreads the communication volume evenly across GPUs.

  * `tree-all-reduce`: used in parameter-server-based DP, where the peak communication load on the parent node is high.
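The two phases of a ring all-reduce can be simulated in a single process. The sketch below is a toy model, not how NCCL or DDP implement it: ranks are list indices, a "send" is a list copy, and the buffer length is assumed to be divisible by the number of ranks. It also counts how many elements each rank sends, which shows why the load is even.

```python
def ring_all_reduce(buffers):
    """Sum-all-reduce over a simulated ring; returns (results, elements_sent_per_rank)."""
    n = len(buffers)
    length = len(buffers[0])
    assert length % n == 0, "toy model: buffer must split into n equal chunks"
    chunk = length // n
    data = [list(b) for b in buffers]
    sent = [0] * n

    def span(c):
        return range(c * chunk, (c + 1) * chunk)

    def exchange(chunk_for_rank, combine):
        # Snapshot all outgoing messages first so the sends are simultaneous.
        messages = []
        for r in range(n):
            c = chunk_for_rank(r)
            messages.append((c, [data[r][i] for i in span(c)]))
            sent[r] += chunk
        for r, (c, payload) in enumerate(messages):
            dst = (r + 1) % n  # each rank only talks to its right neighbor
            for i, value in zip(span(c), payload):
                data[dst][i] = combine(data[dst][i], value)

    # Phase 1, reduce-scatter: after n-1 steps rank r owns the full sum of chunk (r+1) % n.
    for step in range(n - 1):
        exchange(lambda r: (r - step) % n, lambda mine, theirs: mine + theirs)

    # Phase 2, all-gather: circulate the finished chunks around the ring.
    for step in range(n - 1):
        exchange(lambda r: (r + 1 - step) % n, lambda mine, theirs: theirs)

    return data, sent


# Four ranks, each holding a gradient buffer of 8 elements filled with rank + 1.
results, sent = ring_all_reduce([[rank + 1] * 8 for rank in range(4)])
```

Each rank sends $2(N-1)$ chunks of size $\theta / N$, that is $\frac{2(N-1)}{N}\theta$ elements, which approaches $2\theta$ as the number of ranks $N$ grows and is identical on every rank.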

### Parallelism Techniques and Their Communication Volume per GPU

- **Data Parallelism (DP)**: Minimal forward communication, but a large **all-reduce** step for gradients: about $2\theta$ data per backward pass, where $\theta$ is the number of model parameters. It can be optimized with quantized gradients or asynchronous gradient updates, so DP has the lowest communication priority in hybrid parallelism.

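- **Tensor Parallelism (TP)**: All-reduce steps in both the forward and backward passes of every layer. These all-reduces exchange activations (on the order of $bsh$ each) rather than gradients of size $\theta$, and they sit on the critical path of computation, leading to higher communication overhead than DP.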

- **Pipeline Parallelism (PP)**: Pipelined micro-batches involve only light activation communication between adjacent stages. The communication volume per transfer is $\frac{bsh}{M}$, where $b$ is the batch size per training step, $s$ is the sequence length, $h$ is the hidden size, and $M$ is the number of micro-batches.

- **Context Parallelism (CP)**: Splits activations along the sequence dimension across GPUs (e.g., for long sequences). Activations are exchanged via ring attention, and each partition has size $\frac{bsh}{CP}$, where $CP$ is the context-parallel degree.

In general, the ordering of communication demand in hybrid parallelism is TP > CP > PP > DP. This ordering helps decide which parallelism groups to place on the fastest links (for example, keeping TP within a node) for the best training efficiency.
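The per-GPU formulas quoted above for DP, PP, and CP are easy to evaluate for a concrete configuration. The sketch below does so; every number in it (a 7-billion-parameter model, 2 bytes per element, the batch and sequence sizes) is a hypothetical choice for illustration, and TP is left out because its total depends on the number of layers and all-reduces per layer.

```python
BYTES_PER_ELEMENT = 2  # assumption: 16-bit gradients and activations


def dp_bytes(theta):
    """Gradient all-reduce: about 2 * theta elements per backward pass."""
    return 2 * theta * BYTES_PER_ELEMENT


def pp_bytes(b, s, h, num_microbatches):
    """Activation handed to the next pipeline stage for one micro-batch: bsh / M."""
    return b * s * h / num_microbatches * BYTES_PER_ELEMENT


def cp_bytes(b, s, h, cp_degree):
    """One sequence partition passed around the ring: bsh / CP."""
    return b * s * h / cp_degree * BYTES_PER_ELEMENT


# Hypothetical configuration.
theta = 7_000_000_000
b, s, h = 8, 4096, 4096

for name, volume in [
    ("DP all-reduce", dp_bytes(theta)),
    ("PP per micro-batch (M=8)", pp_bytes(b, s, h, 8)),
    ("CP per partition (CP=2)", cp_bytes(b, s, h, 2)),
]:
    print(f"{name}: {volume / 2**20:,.0f} MiB")
```

Note that raw volume alone does not give the priority ordering: the DP all-reduce is the largest single transfer here, but it happens once per step and overlaps with the backward pass, whereas TP and CP traffic recurs in every layer.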

### Kernel-Level Improvements

Optimizations like **Flash Attention** reduce the traffic between HBM and on-chip memory (SRAM) by computing attention in tiles that stay in SRAM, so the full attention matrix is never written to or read back from HBM. Combined with specialized warp scheduling strategies, these techniques alleviate bottlenecks inside GPUs.
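The core idea behind tiled attention, an online softmax that processes keys and values one block at a time, can be sketched in pure Python for a single query vector. This is a simplified illustration rather than the Flash Attention kernel itself: it omits the $1/\sqrt{d}$ scaling, batching, and everything GPU-specific, and the function names are hypothetical.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def naive_attention(q, keys, values):
    """Reference: materialize every score, then softmax, then weight the values."""
    scores = [dot(q, k) for k in keys]
    top = max(scores)
    weights = [math.exp(s - top) for s in scores]
    total = sum(weights)
    return [sum(w * v[d] for w, v in zip(weights, values)) / total
            for d in range(len(values[0]))]


def tiled_attention(q, keys, values, block_size):
    """Same result, but only one block of scores exists at any time."""
    dim = len(values[0])
    running_max = -math.inf
    running_sum = 0.0
    acc = [0.0] * dim
    for start in range(0, len(keys), block_size):
        k_blk = keys[start:start + block_size]
        v_blk = values[start:start + block_size]
        scores = [dot(q, k) for k in k_blk]
        new_max = max(running_max, max(scores))
        # Rescale what was accumulated under the old maximum (0.0 on the first block).
        scale = math.exp(running_max - new_max)
        weights = [math.exp(s - new_max) for s in scores]
        running_sum = running_sum * scale + sum(weights)
        acc = [a * scale + sum(w * v[d] for w, v in zip(weights, v_blk))
               for d, a in enumerate(acc)]
        running_max = new_max
    return [a / running_sum for a in acc]


q = [0.1, 0.2]
keys = [[i * 0.1, -i * 0.05] for i in range(7)]
values = [[float(i), float(i * i)] for i in range(7)]
out_tiled = tiled_attention(q, keys, values, block_size=3)
out_naive = naive_attention(q, keys, values)
```

Because only the running maximum, the running sum, and the accumulator carry over between blocks, the working set per block is small enough to stay in fast memory regardless of sequence length.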

### Storage and Checkpointing

- **Checkpointing** transfers model parameters from GPU HBM to SSD/HDD, typically the largest I/O operation during training. Faster SSDs with dedicated PCIe lanes can help.

- **Async Checkpointing** and advanced data loaders (e.g., tree-based data loader in [MegaScale](https://arxiv.org/pdf/2402.15627)) can reduce overhead by overlapping checkpoint writes with computation or by caching data at the node level before distributing to individual GPUs.
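Overlapping checkpoint writes with computation can be sketched with the standard library alone. In the toy version below, `copy.deepcopy` stands in for the fast copy from GPU HBM to host DRAM, and a background thread performs the slow disk write. The function name `async_checkpoint` and the dictionary-based state are assumptions for illustration; this is not the mechanism MegaScale or any specific framework uses.

```python
import copy
import os
import pickle
import tempfile
import threading


def async_checkpoint(state, path):
    """Snapshot the state synchronously, then write it to disk in the background."""
    snapshot = copy.deepcopy(state)  # stand-in for the HBM -> host DRAM copy

    def write():
        tmp_path = path + ".tmp"
        with open(tmp_path, "wb") as f:
            pickle.dump(snapshot, f)
        os.replace(tmp_path, path)  # atomic rename: readers never see a partial file

    writer = threading.Thread(target=write)
    writer.start()
    return writer


state = {"step": 0, "weights": [0.0, 0.0, 0.0, 0.0]}
ckpt_path = os.path.join(tempfile.mkdtemp(), "ckpt.pkl")

writer = async_checkpoint(state, ckpt_path)
state["step"] += 1          # training continues while the write is in flight
state["weights"][0] = 0.5
writer.join()               # wait before relying on the file

with open(ckpt_path, "rb") as f:
    saved = pickle.load(f)
```

The training loop only blocks for the in-memory snapshot; the saved file reflects the state at the moment of the snapshot, not the later updates.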

### Conclusion

Balancing raw compute with efficient data transfer across all memory tiers is crucial in modern deep learning infrastructures. As compute capabilities grow faster than memory bandwidth, the Roofline model teaches us that many workloads will be memory or I/O bound. By employing parallelism techniques with careful communication planning and leveraging advanced networking hardware, training at HPC scale can be significantly accelerated.
